Abstract

Large language models (LLMs) demonstrate exceptional versatility across diverse NLP tasks, performing well without task-specific fine-tuning. Despite this adaptability, embodiment in LLMs remains underexplored; LLMs differ from embodied systems in robotics, where sensory perception directly informs physical action. Our investigation asks whether LLMs, despite their non-embodied nature, capture implicit human intuitions about fundamental spatial building blocks of language. Drawing on insights into spatial cognitive foundations that develop through early sensorimotor experience, we reproduce three psycholinguistic experiments. Surprisingly, model outputs correlate with human responses, revealing adaptability without a tangible connection to embodied experience. Notable differences include polarized language model responses and reduced correlations for vision language models. This research contributes to a nuanced understanding of the interplay between language, sensory experience, and cognition in cutting-edge language models.

Reproduced Experiments

Experiment 01

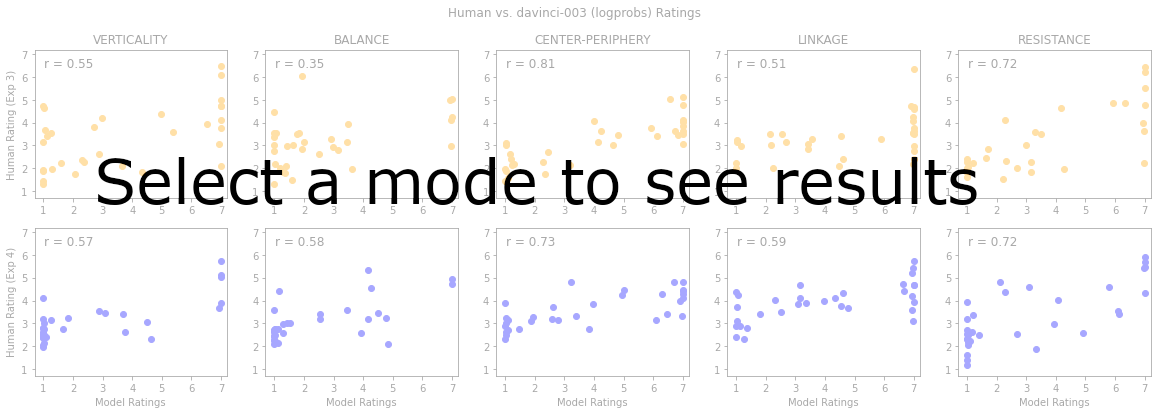

In the first experiment, we replicate studies by Gibbs et al. (1994), who explore intuitions about image schemas with the verb "to stand". In their study, participants rated the relatedness between 32 phrases and five image schemas (balance, verticality, center-periphery, resistance, linkage) on a 7-point Likert scale (1-7). Each participant rated all phrases for one schema before moving to the next, with schema order counterbalanced across participants. The experiment was repeated with a synonym replacing "stand" in each phrase. Each phrase is represented by an image schema profile, i.e., its average participant-rated relatedness to each schema, which can be correlated with LLM responses.
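A minimal sketch of how an image schema profile might be computed and compared with model ratings. It assumes ratings are stored as a dict from schema name to a list of 1-7 scores; this data layout, the helper names `schema_profile` and `pearson`, and the choice of Pearson correlation are illustrative assumptions rather than the exact analysis used here. The numbers in the usage lines are invented.

```python
from statistics import mean

# Schema order used for every profile (the five schemas listed above).
SCHEMAS = ("balance", "verticality", "center-periphery", "resistance", "linkage")


def schema_profile(ratings):
    """Average the 1-7 ratings of one phrase for each schema.

    `ratings` uses a hypothetical layout: schema name -> list of ratings.
    Returns a tuple of means ordered as in SCHEMAS.
    """
    return tuple(mean(ratings[s]) for s in SCHEMAS)


def pearson(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    if sx == 0 or sy == 0:
        # A constant profile (e.g. a model answering 7 for every schema)
        # has no variance, so the correlation is undefined.
        raise ValueError("correlation undefined for a constant profile")
    return cov / (sx * sy)


# Invented example: averaged human ratings for one phrase vs. a model's ratings.
human = schema_profile({
    "balance": [6, 7],
    "verticality": [7, 7],
    "center-periphery": [3, 4],
    "resistance": [2, 3],
    "linkage": [1, 2],
})
model = (6, 7, 4, 2, 1)
r = pearson(human, model)
```

Correlating per phrase is only one option; concatenating the profiles of all phrases and correlating once would be an equally plausible design. The constant-profile check matters in practice, since strongly polarized model answers can collapse a profile to a single value.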

Example:

Consider the notion of Verticality. Verticality refers to the sense of an extension along an up-down orientation. How strongly is the phrase "stand at attention" related to this notion on a scale from 1 (not at all related) to 7 (very strongly related)?
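The example prompt can be generated from a template for every (schema, phrase) pair, as in the following sketch. Only the Verticality definition is quoted from this section; the definitions of the other four schemas, the names `build_prompt` and `parse_rating`, and the regex-based answer parsing are assumptions for illustration.

```python
import re

# Definitions keyed by schema; only Verticality is quoted from the example,
# the other schemas would need their own definitions.
DEFINITIONS = {
    "Verticality": (
        "Verticality refers to the sense of an extension "
        "along an up-down orientation."
    ),
}

TEMPLATE = (
    "Consider the notion of {schema}. {definition} How strongly is the phrase "
    '"{phrase}" related to this notion on a scale from 1 (not at all related) '
    "to 7 (very strongly related)?"
)


def build_prompt(schema, phrase):
    """Fill the rating template for one (schema, phrase) pair."""
    return TEMPLATE.format(schema=schema, definition=DEFINITIONS[schema], phrase=phrase)


def parse_rating(reply):
    """Return the first standalone digit 1-7 in a model reply, or None.

    Deliberately naive: a reply that restates the scale ("from 1 to 7 ...")
    would be parsed as 1, so real use needs a stricter answer format.
    """
    match = re.search(r"\b([1-7])\b", reply)
    return int(match.group(1)) if match else None
```

For example, `build_prompt("Verticality", "stand at attention")` reproduces the prompt text shown above.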

Experiment 02

The second experiment replicates a study by Beitel et al. (2001). The authors adopt the experimental approach of Experiment 01 but focus on phrases containing the preposition "on". They introduce a new set of image schemas (support, pressure, constraint, covering, visibility) relevant to this context. Instead of the general definitions used in Experiment 01, each image schema is introduced through an example sentence accompanied by a statement explaining how the schema relates to it. For instance, support is introduced with the example: "In the case of the use of 'on' in 'the book is on the desk': the support relation refers to the desk supporting the book."

Example:

In the case of the use of "on" in "the book is on the desk": the VISIBILITY relation refers to the book being visible on the desk.

On a scale from 1 (not at all appropriate) to 7 (very appropriate), how appropriate is the concept VISIBILITY in regards to the phrase: "There is a physician on call"?
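The Experiment 02 prompt differs from Experiment 01 only in how the schema is introduced, so it can be assembled the same way. In this sketch the explanations for SUPPORT and VISIBILITY are taken from the examples in this section; explanations for pressure, constraint, and covering are not given here and would have to be added. The function name `build_on_prompt` and the blank-line separator between context and question are assumptions.

```python
# Explanations quoted from the examples above, keyed by schema label.
EXPLANATIONS = {
    "SUPPORT": "the support relation refers to the desk supporting the book.",
    "VISIBILITY": "the VISIBILITY relation refers to the book being visible on the desk.",
}

# Shared example sentence that anchors every schema explanation.
CONTEXT = 'In the case of the use of "on" in "the book is on the desk": '

QUESTION = (
    "On a scale from 1 (not at all appropriate) to 7 (very appropriate), "
    'how appropriate is the concept {schema} in regards to the phrase: "{phrase}"?'
)


def build_on_prompt(schema, phrase):
    """Combine the example-based schema explanation with the rating question."""
    return CONTEXT + EXPLANATIONS[schema] + "\n\n" + QUESTION.format(schema=schema, phrase=phrase)


prompt = build_on_prompt("VISIBILITY", "There is a physician on call")
```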

Experiment 03

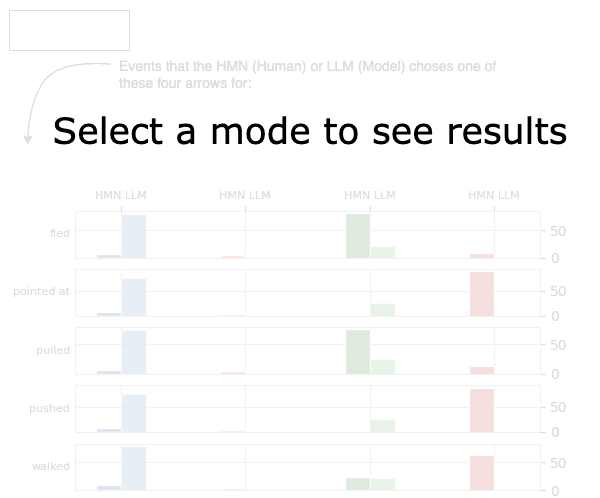

Richardson et al. (2001) offer experimental support for image schemas through a task in which participants select images corresponding to 30 verbs categorized by concreteness and primary directionality (horizontal, vertical, neutral). Participants choose from four images marked with arrows indicating directionality along the horizontal and vertical axes, and the results are analyzed with respect to each verb's primary direction (horizontal or vertical). The study demonstrates the connection between linguistic concreteness and spatial representation in a visual task; because the task is visual, replicating it requires an evaluation using state-of-the-art vision language models.
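One way to summarize arrow choices by primary direction, sketched under stated assumptions: each response is one of four arrows, each arrow lies on either the vertical or the horizontal axis, and the statistic is the share of vertical choices per verb, averaged within each verb category. The helper names and the placeholder verb labels are hypothetical, and this is not necessarily the statistic reported by Richardson et al. (2001).

```python
from collections import defaultdict

# Axis of each arrow option.
AXIS = {"↑": "vertical", "↓": "vertical", "←": "horizontal", "→": "horizontal"}


def vertical_share(choices):
    """Fraction of arrow choices that lie on the vertical axis."""
    if not choices:
        raise ValueError("no choices given")
    return sum(AXIS[c] == "vertical" for c in choices) / len(choices)


def share_by_category(responses, categories):
    """Average vertical share per verb category.

    responses:  verb -> list of chosen arrows (e.g. repeated model samples)
    categories: verb -> "horizontal", "vertical" or "neutral"
    """
    grouped = defaultdict(list)
    for verb, choices in responses.items():
        grouped[categories[verb]].append(vertical_share(choices))
    return {cat: sum(vals) / len(vals) for cat, vals in grouped.items()}


# Toy data with placeholder verbs, not results from the study.
responses = {"verb_a": ["↑", "↑", "↓"], "verb_b": ["→", "←", "↑"]}
categories = {"verb_a": "vertical", "verb_b": "horizontal"}
summary = share_by_category(responses, categories)
```

If the experimental effect holds, verbs categorized as vertical should show a higher vertical share than horizontal ones; the same summary can be computed for human and model responses.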

Example (Pseudo-Visual Condition):

Given the event "lifted", which of the following arrows best represents this event: ↑, ↓, ←, →. A research participant would choose the arrow:

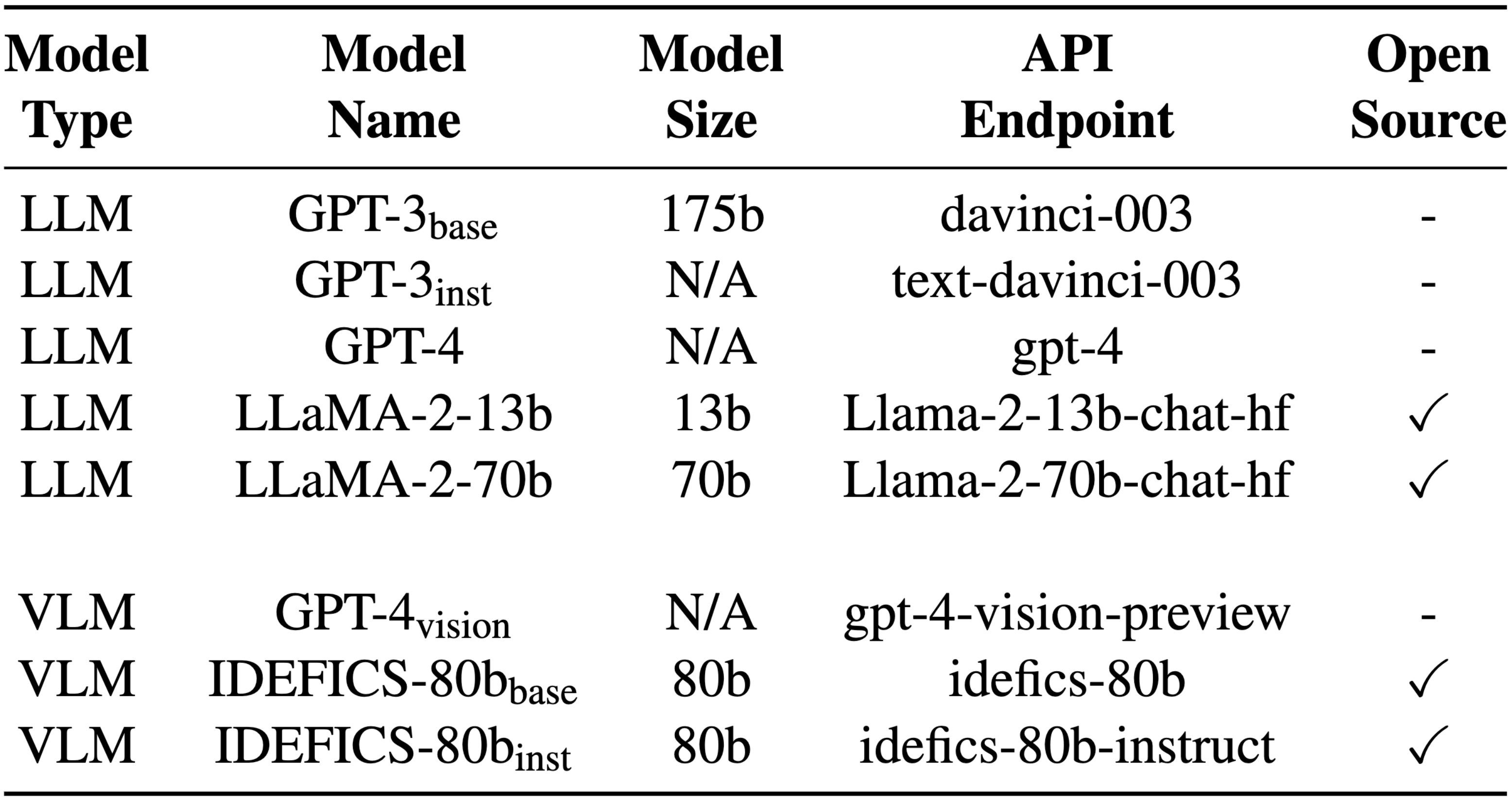
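The pseudo-visual prompt can likewise be filled from a template, with the model's completion mapped back to one of the four arrows. This is a minimal sketch: `build_arrow_prompt` and `parse_arrow` are hypothetical helpers, and scanning the completion for the first arrow character is only one option; comparing the model's probabilities for the four arrow tokens would be another.

```python
ARROWS = ("↑", "↓", "←", "→")


def build_arrow_prompt(event):
    """Fill the pseudo-visual template for one event (verb)."""
    return (
        f'Given the event "{event}", which of the following arrows best '
        f"represents this event: {', '.join(ARROWS)}. "
        "A research participant would choose the arrow:"
    )


def parse_arrow(completion):
    """Return the first arrow symbol in a completion, or None if there is none."""
    for ch in completion:
        if ch in ARROWS:
            return ch
    return None
```

For example, `build_arrow_prompt("lifted")` reproduces the pseudo-visual example prompt, and `parse_arrow` extracts the chosen arrow from a free-form completion.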

Selected Models